S3 Object Storage

Description

Object Storage (S3) is available on the CLOUD → S3 Object Storage tab. It is an all-in-one cloud solution for big data. S3 (Simple Storage Service) is a high-performance, scalable data store with a flat, non-hierarchical structure: all objects sit at the same level. This gives flexibility and makes it convenient to store a variety of information for a long time and access it instantly.

The structure of S3 is as simple as possible: there are only three entities (from largest to smallest):

- Storage. A storage is where files are kept. Access to the entire storage is granted by a set of keys. For most projects a single storage is sufficient, but if you have different products with different teams, you can create several storages.

- Buckets. Buckets are containers in which you store objects (data files); they are the basic level of data organisation in S3. Bucket names are unique within the entire storage and must be 3 to 63 characters long. Use only lowercase letters, numbers, hyphens and full stops; a hyphen or full stop cannot be the first or last character. Keep in mind that the bucket name becomes part of the URL used to access the data; see S3 Addressing Models for exactly how the URL of an object (file) is formed.

- Objects. An object is a key (its name) and a value (the data file itself: video, pictures, etc.).
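The bucket naming rules above can be checked before you try to create a bucket. The sketch below is a minimal client-side check built only from the rules stated here (3 to 63 characters; lowercase letters, digits, hyphens and full stops; no hyphen or full stop at the start or end). The storage may enforce additional restrictions, so treat it as an approximation, not the service's own validation; `is_valid_bucket_name` is a hypothetical helper.

```python
import re

# Pattern built only from the rules described in this article:
# - 3 to 63 characters in total;
# - lowercase letters, digits, hyphens and full stops only;
# - the first and last characters must not be a hyphen or a full stop.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the naming rules above (client-side check only)."""
    return _BUCKET_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["testbucket", "my.bucket-01", "ab", "-bucket", "bucket.", "MyBucket"]:
        print(f"{candidate!r}: {is_valid_bucket_name(candidate)}")
```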

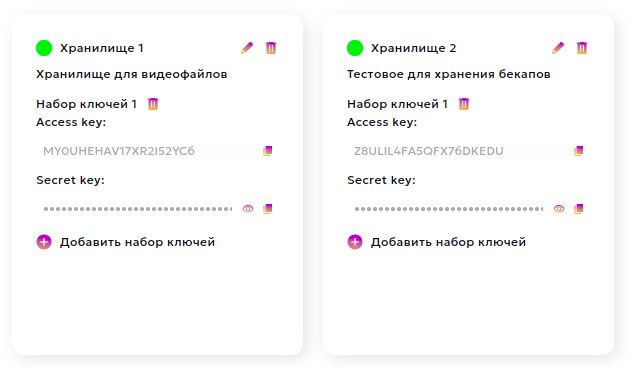

In your personal account you can create up to 3 storages, view the buckets you have created and the volume of uploaded objects, and manage access (create and delete keys and storages):

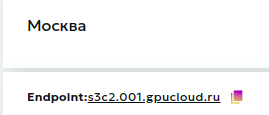

Access to S3 requires only a set of keys and the endpoint, which is fixed (for example, https://s3c2.001.gpucloud.ru/ in the Moscow zone).

Client-side access to S3

S3 is based on an API created by Amazon Web Services (AWS) that has become a de facto industry standard.

Each object (that is, an arbitrary file with an identifier) in this storage can be retrieved over HTTP or HTTPS using its unique identifier. To work with these objects (files), you have two options: use a client (console or GUI), or use the API, whose specification is published on the website of its authors, Amazon Web Services.
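Because every object is addressable over HTTP(S), an object whose ACL allows public reading can be fetched with any HTTP client. The Python sketch below uses only the standard library and assumes the object is publicly readable. Private objects require requests signed with your keys (AWS Signature Version 4), which the clients described below handle for you. The helper names are hypothetical.

```python
import urllib.request


def build_get_request(url: str) -> urllib.request.Request:
    """Prepare an unsigned HTTPS GET request for a publicly readable object."""
    return urllib.request.Request(url, method="GET")


def download_public_object(url: str, destination: str, timeout: float = 30.0) -> int:
    """Download a public object to `destination`; return the number of bytes written.

    Assumes the object's ACL grants read access to all users; otherwise the server
    rejects the unsigned request (typically 403 Forbidden) and urlopen raises HTTPError.
    """
    request = build_get_request(url)
    written = 0
    with urllib.request.urlopen(request, timeout=timeout) as response, open(destination, "wb") as out:
        # Stream in 1 MiB chunks so large objects are not held in memory at once.
        while chunk := response.read(1024 * 1024):
            out.write(chunk)
            written += len(chunk)
    return written
```

For example, `download_public_object("https://testbucket.s3c2.001.gpucloud.ru/testpicture.jpg", "testpicture.jpg")` would fetch the sample file used later in this article, provided it has been made publicly readable.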

Let's take a closer look at popular S3 clients: the graphical CloudBerry, S3Browser and CyberDuck, and the console clients Rclone and s3cmd.

Graphical clients:

- CloudBerry – https://www.msp360.com/cloudberry-backup/download/cbes3free/

- S3Browser – https://s3browser.com/

- CyberDuck – https://cyberduck.io/

Command-line clients:

- Rclone – https://rclone.org/downloads/

- s3cmd – https://s3tools.org/s3cmd/

Compatible clients

CloudBerry

Basic settings

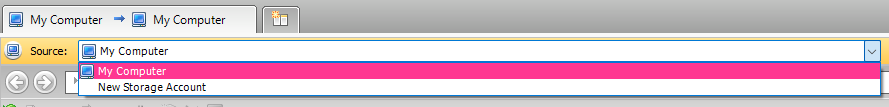

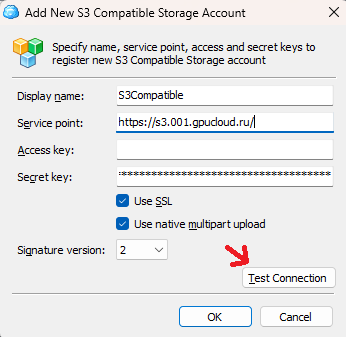

After opening the client, select Source -> New Storage Account to add a user:

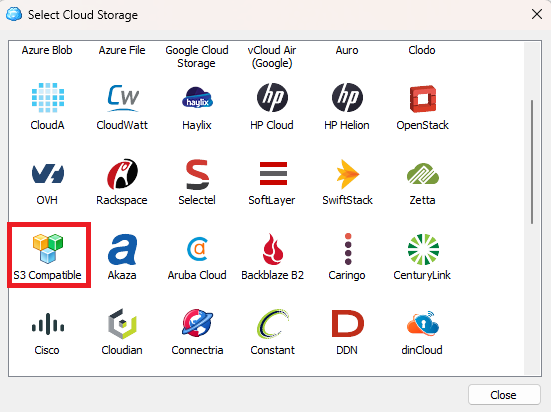

You will then be prompted to select the storage type; choose S3 Compatible.

Next, set the username, enter the Service point (the storage endpoint, e.g. https://s3c2.001.gpucloud.ru/ in the Moscow zone) and the user keys. Then test the connection to the storage; if it succeeds, the Connection success window appears:

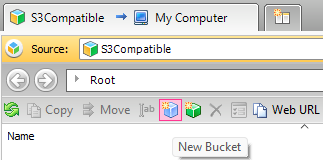

Creating a bucket

To create a bucket, open the account you created, click the New Bucket icon and enter the bucket name:

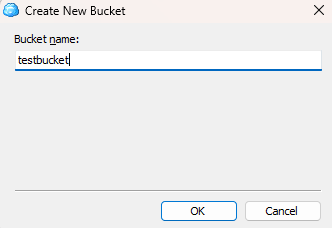

Uploading files

To upload a file from your computer to the storage, select Source -> My computer and drag the desired file into the bucket, or drag the file directly from File Explorer:

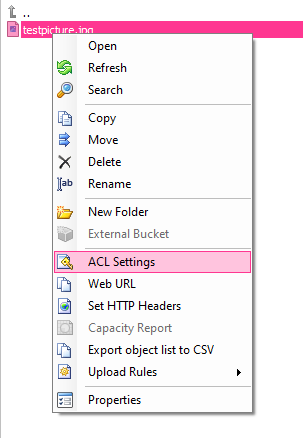

To change access rights to a bucket, folder or file, right-click the object and select ACL Settings:

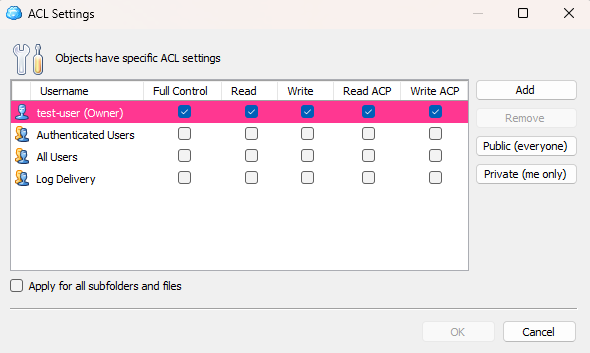

There are buttons to give all users permission to read the file or to make it private. The individual permissions are:

- Read: read the file, view it.

- Write: modify the file.

- Read ACP: read the permissions of different users.

- Write ACP: modify the permissions of different users.
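The two buttons correspond to what the S3 API calls canned ACLs: private (only the owner has FULL_CONTROL) and public-read (the owner keeps FULL_CONTROL and the AllUsers group gets READ). The sketch below models those grants as plain data, following the Amazon S3 canned ACL definitions; it assumes this storage interprets canned ACLs the same way, does not talk to the server, and uses the string "owner" as a simplified stand-in for the owner's account ID.

```python
from typing import List, NamedTuple

# Permission names as used by the S3 API, with the meanings listed above.
PERMISSIONS = {
    "READ": "read the file, view it",
    "WRITE": "modify the file",
    "READ_ACP": "read the permissions of different users",
    "WRITE_ACP": "modify the permissions of different users",
    "FULL_CONTROL": "all of the above",
}

# Grantee URI that S3 uses for "everyone, including anonymous users".
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"


class Grant(NamedTuple):
    grantee: str     # "owner" (simplified) or a group URI such as ALL_USERS
    permission: str  # one of the PERMISSIONS keys


def canned_acl_grants(acl: str) -> List[Grant]:
    """Return the grants implied by the 'private' and 'public-read' canned ACLs."""
    grants = [Grant("owner", "FULL_CONTROL")]  # the owner keeps full control in both cases
    if acl == "public-read":
        grants.append(Grant(ALL_USERS, "READ"))
    elif acl != "private":
        raise ValueError(f"unsupported canned ACL in this sketch: {acl!r}")
    return grants


def is_publicly_readable(grants: List[Grant]) -> bool:
    """True if anonymous users may read the object."""
    return any(g.grantee == ALL_USERS and g.permission in ("READ", "FULL_CONTROL") for g in grants)
```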

The link to the file will look like this:

https://testbucket.s3c2.001.gpucloud.ru/testpicture.jpg
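A link of this form is a virtual-hosted style URL: the bucket name is the first part of the host name and the object key is the path. The sketch below splits such a link back into bucket and key. It assumes the endpoint s3c2.001.gpucloud.ru from the example, and `split_object_url` is a hypothetical helper, not part of any client.

```python
from typing import Tuple
from urllib.parse import unquote, urlsplit

ENDPOINT = "s3c2.001.gpucloud.ru"  # example endpoint from this article (Moscow zone)


def split_object_url(url: str, endpoint: str = ENDPOINT) -> Tuple[str, str]:
    """Return (bucket, key) for a virtual-hosted style object URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    suffix = "." + endpoint
    if not host.endswith(suffix):
        # Path-style URLs (bucket in the path) and foreign hosts end up here.
        raise ValueError(f"{host!r} is not a bucket subdomain of {endpoint!r}")
    bucket = host[: -len(suffix)]
    key = unquote(parts.path.lstrip("/"))  # keys may contain percent-encoded characters
    return bucket, key
```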

S3Browser

Basic settings

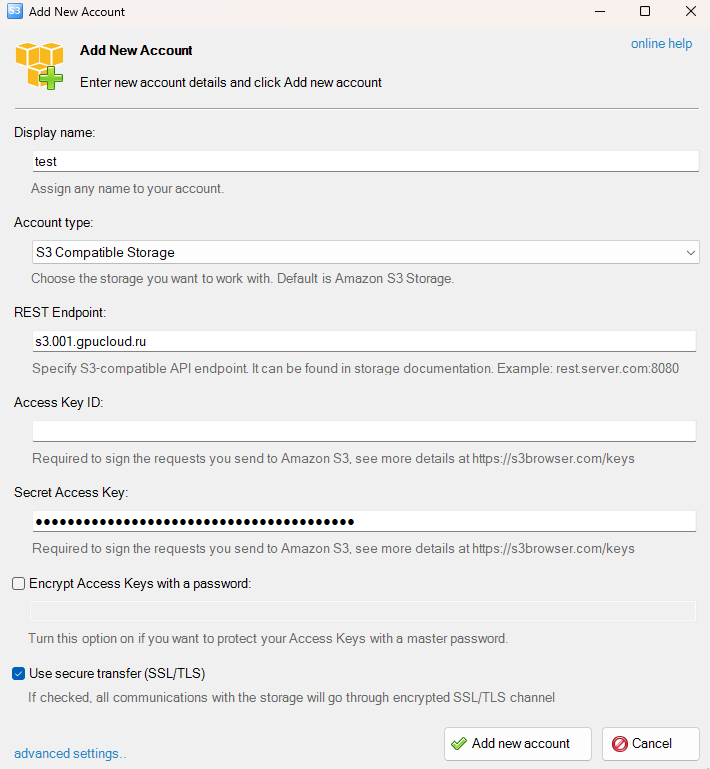

When you open the client, it offers to add a user. Enter a name, the account type (S3 Compatible Storage), the REST Endpoint (e.g. s3c2.001.gpucloud.ru), the Access Key and the Secret Access Key, then click Add new account:

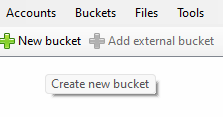

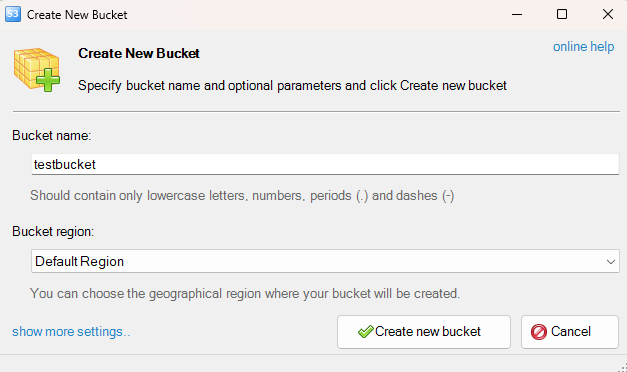

Creating a bucket

To create a bucket, open the account you created, click the New bucket icon and enter the bucket name.

Uploading files

To upload a file from your computer to the storage, select Upload -> Upload file(s) and choose the desired file(s), or drag and drop the file directly from File Explorer:

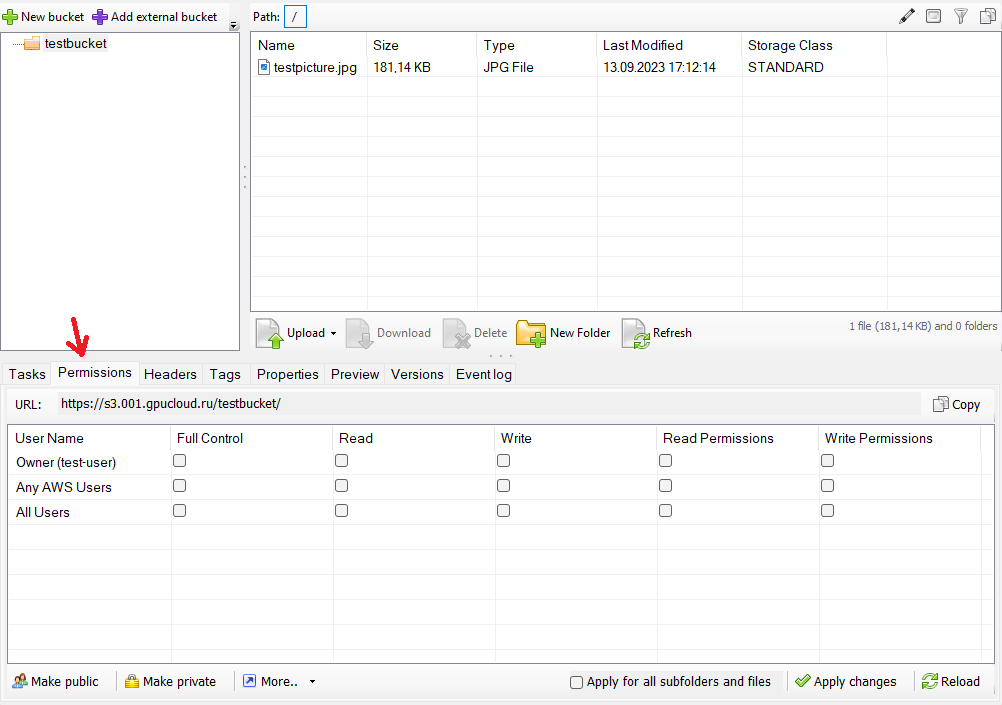

To change access rights to a bucket, folder or file, select the object and open the Permissions tab in the bottom panel.

There are buttons at the bottom to give all users permission to read the file or to make it private. The individual permissions are:

- Read: read the file, view it.

- Write: modify the file.

- Read Permissions: read the permissions of different users.

- Write Permissions: modify the permissions of different users.

The link to the file will look like this:

https://testbucket.s3c2.001.gpucloud.ru/testpicture.jpg

CyberDuck

CyberDuck is an open source client for macOS and Windows; a command-line version (duck) is also available for Linux.

The client supports the FTP, SFTP, OpenStack Swift and Amazon S3 protocols.

To install CyberDuck, download the distribution from the official website (https://cyberduck.io/).

Connecting to the bucket

- Connection type: select Amazon S3.

- Server: specify <endpoint>, the storage address, for example s3c2.001.gpucloud.ru.

- Port: 443.

- Access Key ID: the identifier of the Access Key.

- Password: the Secret Key.

For further instructions on working with CyberDuck, see the developer's website.

Rclone

Installation

You can download and install Rclone from the official website (https://rclone.org/downloads/).

Setting up the S3 configuration

Run the rclone config command in a terminal to configure a connection to your S3 object storage. You will need to specify a configuration name, select s3 as the storage type, and follow the prompts to enter the credentials and access parameters (keys and endpoint).

When asked for the storage type, select the S3-compatible option (s3) and provide a name; when asked for the provider, choose a generic S3-compatible provider rather than Amazon Web Services (AWS).
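rclone config saves your answers as a section of its configuration file (rclone config file prints its location). As a sketch, the Python code below renders an equivalent section with the standard configparser module. The section name myS3Config matches the example below; the option names (type, provider, access_key_id, secret_access_key, endpoint) are those of rclone's s3 backend, and provider = Other is our assumption for a generic S3-compatible storage. When in doubt, let rclone config write the file itself.

```python
import configparser
import io


def rclone_s3_section(name: str, access_key: str, secret_key: str,
                      endpoint: str = "https://s3c2.001.gpucloud.ru") -> str:
    """Render an rclone.conf section for an S3-compatible remote (sketch)."""
    config = configparser.ConfigParser(interpolation=None)
    config[name] = {
        "type": "s3",          # rclone's S3 backend
        "provider": "Other",   # assumption: generic S3-compatible provider
        "access_key_id": access_key,
        "secret_access_key": secret_key,
        "endpoint": endpoint,
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder credentials; never commit real keys to source control.
    print(rclone_s3_section("myS3Config", "YOUR_ACCESS_KEY", "YOUR_SECRET_KEY"))
```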

Uploading Files

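After setting up the S3 configuration, you can upload a file to S3 with the rclone copy or rclone sync command. Note that rclone sync makes the destination identical to the source, so files that exist only in the destination are deleted. The command syntax is: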

rclone copy /path/to/local/file configuration_name:bucket_name/path_in_S3/

Here:

- /path/to/local/file is the path to the file you want to upload.

- configuration_name is the name of the S3 configuration you specified during setup.

- bucket_name is the name of the bucket in your S3 storage where you want to upload the file.

- path_in_S3/ is the path in S3 where the file will be placed. You can leave it blank to upload the file to the root of the bucket.

Example command for uploading a file:

rclone copy /path/to/my_file myS3Config:myBucket/

After executing this command, your file will be uploaded to the specified bucket in S3.
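If you need to run the same upload from a script, one option is to call rclone through Python's subprocess module. The sketch below only assembles the command shown above and runs it; it assumes rclone is installed and on PATH and that a remote (here myS3Config) has been configured. `build_rclone_copy_args` and `rclone_copy` are hypothetical helpers.

```python
import subprocess
from typing import List


def build_rclone_copy_args(local_path: str, remote: str, bucket: str, path_in_s3: str = "") -> List[str]:
    """Build: rclone copy <local_path> <remote>:<bucket>/<path_in_s3>"""
    destination = f"{remote}:{bucket}/{path_in_s3.lstrip('/')}"
    return ["rclone", "copy", local_path, destination]


def rclone_copy(local_path: str, remote: str, bucket: str, path_in_s3: str = "") -> None:
    """Run rclone copy; raises CalledProcessError if rclone exits with an error."""
    args = build_rclone_copy_args(local_path, remote, bucket, path_in_s3)
    # Passing a list (no shell) avoids quoting problems with spaces in paths.
    subprocess.run(args, check=True)
```

Leaving path_in_s3 empty uploads to the root of the bucket, as in the example command.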

s3cmd

The s3cmd client is a simple, easy-to-use open source client written in Python.

It can be installed on any popular OS. Instructions and the programme itself are available on the official site (https://s3tools.org/s3cmd/).

Let's look at installation and usage using Ubuntu as an example.

Usage on other operating systems is the same; for installation, see the corresponding section of the official site.

Installation

- Open a terminal on your Ubuntu machine.

- Install s3cmd using the following commands:

sudo apt-get update

sudo apt-get install s3cmd

Setup

After installation, run the configuration of s3cmd using the command:

s3cmd --configure

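You will be asked a few questions to configure access to your S3 storage. Have the following ready:

- Access Key.

- Secret Key.

- The region of your S3 (e.g., "us-east-1").

- The S3 endpoint (e.g., s3c2.001.gpucloud.ru) and the DNS-style template for bucket access (e.g., %(bucket)s.s3c2.001.gpucloud.ru).

- An optional encryption password, and whether to use HTTPS.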

Example of a valid config:

- Access Key: ***

- Secret Key: ***

- Default Region: us-east-1

- S3 Endpoint: s3c2.001.gpucloud.ru

- DNS-style bucket+hostname:port template for accessing a bucket: %(bucket)s.s3c2.001.gpucloud.ru

- Encryption password:

- Path to GPG programme: /usr/bin/gpg

- Use HTTPS protocol: True

- HTTP Proxy server name:

- HTTP Proxy server port: 0
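s3cmd --configure stores these answers in the ~/.s3cfg file. The DNS-style template is a Python %-format string into which s3cmd substitutes the bucket name to get the host for each request. The sketch below shows that substitution and renders a minimal [default] section with configparser. The option names (access_key, secret_key, bucket_location, host_base, host_bucket, use_https) are those s3cmd writes, but this is not a complete s3cmd configuration; running s3cmd --configure remains the safer way to produce the file.

```python
import configparser
import io

HOST_BASE = "s3c2.001.gpucloud.ru"
HOST_BUCKET = "%(bucket)s.s3c2.001.gpucloud.ru"  # DNS-style template from the example above


def bucket_hostname(bucket: str, template: str = HOST_BUCKET) -> str:
    """Expand the DNS-style template with Python %-formatting."""
    return template % {"bucket": bucket}


def render_s3cfg(access_key: str, secret_key: str, region: str = "us-east-1") -> str:
    """Render a minimal ~/.s3cfg [default] section (sketch, not a complete s3cmd config)."""
    # interpolation=None keeps "%(bucket)s" literal instead of treating it as a configparser reference.
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {
        "access_key": access_key,
        "secret_key": secret_key,
        "bucket_location": region,
        "host_base": HOST_BASE,
        "host_bucket": HOST_BUCKET,
        "use_https": "True",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```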

Usage

Once configured, you can use s3cmd to perform various operations on S3 buckets.

Examples of commands:

- Upload a local file to an S3 bucket:

s3cmd put local_file_name s3://bucket_name/path_in_bucket/

- Download a file from an S3 bucket to the local machine:

s3cmd get s3://bucket_name/path_to_file_in_bucket local_directory_name/

- Get a list of files in the S3 bucket:

s3cmd ls s3://bucket_name/

- Delete a file from an S3 bucket:

s3cmd del s3://bucket_name/path_to_file_in_bucket

These are the basic commands for working with s3cmd.

You can run s3cmd --help to see a complete list of commands and options.

Additional information

S3 Addressing Models

We currently support two addressing models for HTTP(S) access to S3 storage:

- Path-style: the bucket name is given in the URI path to the object, for example: https://s3c2.001.gpucloud.ru/some-bucket/some-text-file.txt

- Virtual-hosted style: the bucket name is part of the host name, for example: https://some-bucket.s3c2.001.gpucloud.ru/some-text-file.txt
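The two models differ only in where the bucket name goes. The sketch below builds both forms of URL for the example endpoint. `build_object_url` is a hypothetical helper, and percent-encoding the key with urllib.parse.quote is our assumption about how special characters such as spaces should be handled.

```python
from urllib.parse import quote

ENDPOINT = "s3c2.001.gpucloud.ru"  # example endpoint used throughout this article


def build_object_url(bucket: str, key: str, style: str = "virtual", endpoint: str = ENDPOINT) -> str:
    """Return the HTTPS URL of an object in path-style or virtual-hosted style."""
    encoded_key = quote(key, safe="/")  # keep "/" as a separator, encode spaces etc.
    if style == "path":
        # Bucket is the first segment of the URI path.
        return f"https://{endpoint}/{bucket}/{encoded_key}"
    if style == "virtual":
        # Bucket is a subdomain of the endpoint host name.
        return f"https://{bucket}.{endpoint}/{encoded_key}"
    raise ValueError("style must be 'path' or 'virtual'")
```

With style="virtual", the helper reproduces the link format shown in the CloudBerry and S3Browser sections, e.g. `build_object_url("testbucket", "testpicture.jpg")`.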

We recommend the virtual-hosted style, especially when files are accessed through a CDN and the storage is specified as the origin of the CDN resource.

When you create a CDN resource with an S3 domain, you will need to specify the allowed bucket; a corresponding field appears for this purpose.

Large files

Files larger than 5 GB cannot be copied within S3 in a single copy operation due to technical limitations of the cache.

To copy large files, use multipart upload instead.
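Multipart upload splits an object into numbered parts that are transferred separately and then assembled by the server. The sketch below only plans the byte ranges; it does not contact the storage. The limits it uses (at least 5 MiB per part except the last, at most 5 GiB per part, at most 10,000 parts) are those documented for Amazon S3 and are an assumption for this storage. Ranges are inclusive, in the bytes=first-last form used by HTTP Range headers and by the S3 part-copy operation; `plan_parts` is a hypothetical helper.

```python
from typing import List, Tuple

MIB = 1024 ** 2
GIB = 1024 ** 3
MIN_PART_SIZE = 5 * MIB   # Amazon S3 minimum for every part except the last (assumed here)
MAX_PART_SIZE = 5 * GIB   # Amazon S3 maximum part size (assumed here)
MAX_PARTS = 10_000        # Amazon S3 maximum number of parts (assumed here)


def plan_parts(object_size: int, part_size: int = 100 * MIB) -> List[Tuple[int, str]]:
    """Split `object_size` bytes into (part_number, "bytes=first-last") ranges."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    part_size = max(part_size, MIN_PART_SIZE)
    # Double the part size until the object fits into MAX_PARTS parts.
    while -(-object_size // part_size) > MAX_PARTS:  # ceiling division
        part_size *= 2
    if part_size > MAX_PART_SIZE:
        raise ValueError("object is too large for a multipart upload")
    parts = []
    first = 0
    number = 1
    while first < object_size:
        last = min(first + part_size, object_size) - 1
        parts.append((number, f"bytes={first}-{last}"))
        first = last + 1
        number += 1
    return parts
```

In practice the command-line clients above split large uploads into parts automatically; the part size can be tuned with s3cmd's --multipart-chunk-size-mb option or rclone's --s3-chunk-size option.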